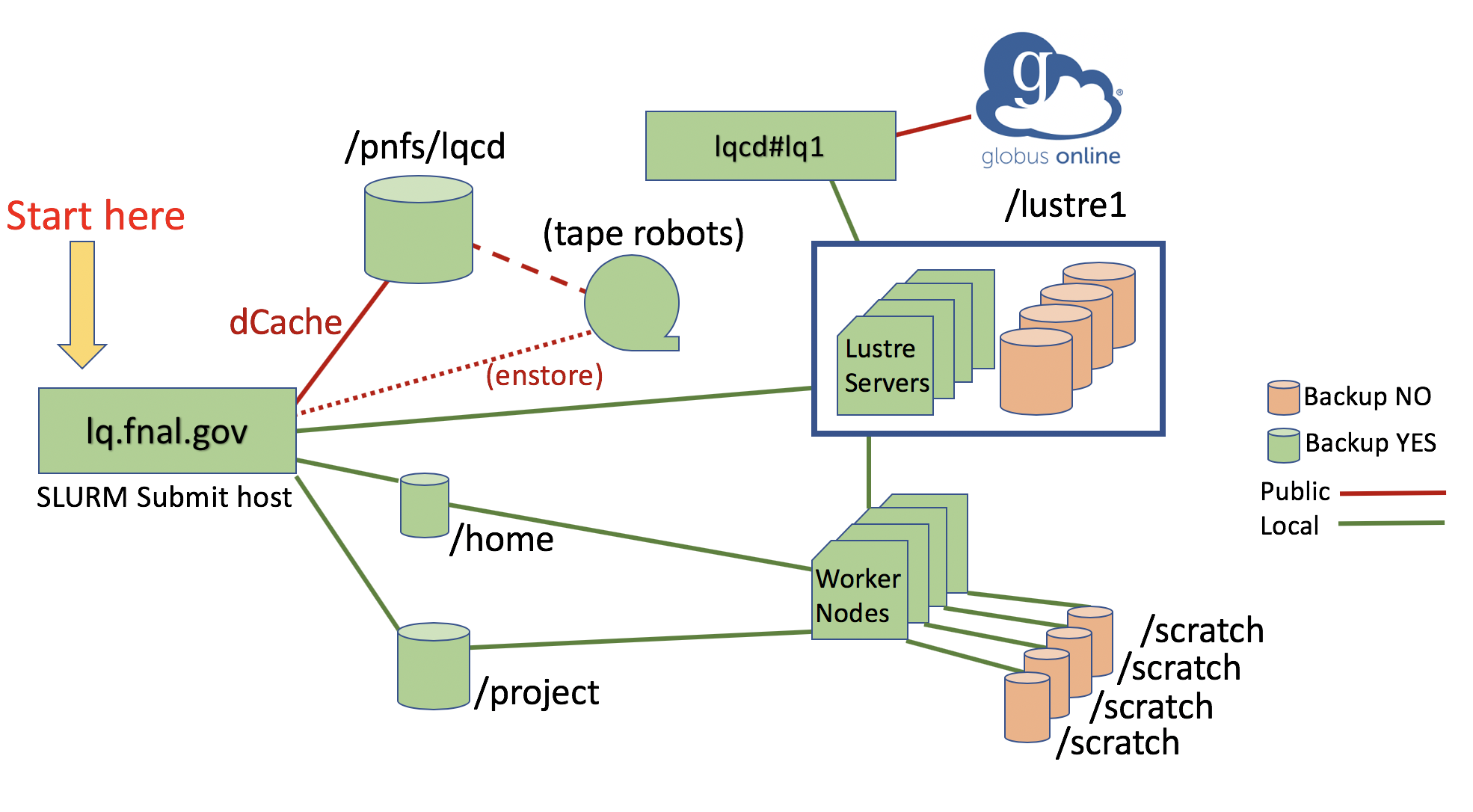

The various filesystems available on the Fermilab LQCD Computing Facility are listed in the table below:

/home Filesystem

Each user has a home directory on the LQ1 cluster, mounted over NFS on all the worker nodes. Home directories need little day-to-day attention from users. The user quota on these disks is about 6 to 10 GB, and the space is backed up nightly.

Lustre Filesystem

Lustre is a scalable, secure, robust, highly available cluster file system. Its POSIX (Portable Operating System Interface) semantics are comparable to those of NFS, and the Lustre filesystem also supports MPI-IO.

The Lustre Filesystem is mounted at /lustre1 and your project will have a subdirectory under the main mount point depending on your disk allocation. This filesystem is not backed up and is meant to be used as a volatile storage space. Quotas are enforced on the file system and count against your disk allocation. Please send an email to lqcd-admin@fnal.gov to apply for a top-level directory for your project and for quota maintenance.

One of the main factors leading to the high performance of Lustre™ file systems is the ability to stripe data over multiple OSTs. The stripe count can be set on a file system, directory, or file level. See the Lustre Striping Guide for more information. Please refer to the following page for more details about the various Lustre user commands. Our Lustre version for /lustre1 is 2.12.2.
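As an illustration of setting a stripe count at the directory or file level, the sketch below builds the `lfs setstripe` and `lfs getstripe` command lines from Python. The path `/lustre1/myproject/bigfiles` and the stripe count of 4 are hypothetical; pick values that suit your own data, and treat this as a sketch rather than a site recommendation.

```python
import shlex
from typing import List


def setstripe_command(path: str, stripe_count: int) -> List[str]:
    """Build an `lfs setstripe` command that sets the stripe count on `path`.

    A count of -1 asks Lustre to stripe over all available OSTs, and 0
    means "use the filesystem default".
    """
    if stripe_count < -1:
        raise ValueError("stripe_count must be -1 or greater")
    return ["lfs", "setstripe", "-c", str(stripe_count), path]


def getstripe_command(path: str) -> List[str]:
    """Build an `lfs getstripe` command that reports the striping of `path`."""
    return ["lfs", "getstripe", path]


# Hypothetical project directory; files created in it afterwards inherit
# the directory's stripe count.
target = "/lustre1/myproject/bigfiles"
print(shlex.join(setstripe_command(target, 4)))
print(shlex.join(getstripe_command(target)))
```

The functions only build the command lines, so they can be inspected before being passed to `subprocess.run` on a node where `/lustre1` is mounted.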

Quotas in Lustre

Storage quotas in Lustre are set according to the approved allocation. Type A & B allocations are set by the SPC and have a specified limit (typically >= 1 TB). Quotas for Type C allocations and opportunistic projects are set by the site liaison (typically < 1 TB).

Lustre quotas are set and accounted for based on Unix group ownership. Your top-level directory will be owned by a group that matches your project name. We set the setgid bit on that directory (often loosely called the “sticky bit”) so that all files and directories created under it are owned by that group. Files restored from tape or extracted from tarballs sometimes keep their original group ownership. Site managers regularly run an audit script that corrects the group ownership of such files and sets the directory bits as needed. Files owned by your group anywhere within this Lustre filesystem count against your group quota.
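To check your own tree before the site audit does, a read-only scan along the following lines can list entries whose group differs from the project group and directories that lack the group-inheritance (setgid) bit. This is a minimal sketch, not the site's audit script; the function name `audit_tree` and the example path are hypothetical.

```python
import os
import stat


def audit_tree(top, expected_gid):
    """Scan `top` and report group-ownership problems without changing anything.

    Returns (wrong_group, missing_setgid):
      wrong_group     paths whose group id differs from expected_gid
      missing_setgid  directories that do not have the setgid bit set
    """
    wrong_group = []
    missing_setgid = []
    for dirpath, _dirnames, filenames in os.walk(top):
        st = os.lstat(dirpath)
        if st.st_gid != expected_gid:
            wrong_group.append(dirpath)
        if not st.st_mode & stat.S_ISGID:
            missing_setgid.append(dirpath)
        for name in filenames:
            path = os.path.join(dirpath, name)
            if os.lstat(path).st_gid != expected_gid:
                wrong_group.append(path)
    return wrong_group, missing_setgid
```

A call such as `audit_tree("/lustre1/myproject", grp.getgrnam("myproject").gr_gid)` would report on a hypothetical project directory; fixing what it finds is left to `chgrp` and `chmod g+s`.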

Because Lustre is optimized for large files, we have implemented an inode limit, i.e. a maximum number of files. This limit follows the step function in the table below. If you need more files than these limits allow, you should be using /scratch on a worker node or /project.

| Low (>) | High (<=) | Number of files |
|---|---|---|
| 0 | 5 TB | 1.0 M |
| 5 TB | 50 TB | 2.5 M |
| 50 TB | 100 TB | 5.0 M |
| 100 TB | 150 TB | 7.5 M |
| 150 TB | (no upper limit) | 10.0 M |
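The step function in the table can be written as a small lookup. The sketch below assumes that the Low and High columns refer to the size of a group's storage quota in TB, which the table does not state explicitly; the function name is hypothetical.

```python
import bisect

# Inclusive upper bounds of each step, in TB, taken from the table above.
_UPPER_BOUNDS_TB = [5, 50, 100, 150]
# File limits for each step; the last entry applies above 150 TB.
_FILE_LIMITS = [1_000_000, 2_500_000, 5_000_000, 7_500_000, 10_000_000]


def max_files_for_quota(quota_tb):
    """Return the file-count limit for a storage quota given in TB.

    Each step is open at its lower bound and closed at its upper bound,
    so exactly 5 TB still falls in the first step.
    """
    if quota_tb <= 0:
        raise ValueError("quota_tb must be positive")
    step = bisect.bisect_left(_UPPER_BOUNDS_TB, quota_tb)
    return _FILE_LIMITS[step]


print(max_files_for_quota(5))    # first step
print(max_files_for_quota(20))   # second step
print(max_files_for_quota(200))  # above the last bound
```

`bisect_left` is used so that a quota sitting exactly on a boundary stays in the lower step, matching the "High (<=)" column.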

Globus file transfers to Lustre

File transfers are managed via the Globus data transfer portal. You will need to log in to Globus via CILogon using your home institution identity, or another identity provider such as ORCID. The Globus transfer endpoint to the LQCD Lustre file system is called “Fermilab LQCD Globus Endpoint for /lustre1”. You must authenticate to the Fermilab endpoint using CILogon. If you use Fermilab as your CILogon institution, your identity is automatically recognized by the Fermilab endpoint. Users who opt for an institution other than Fermilab in CILogon will not be able to transfer files until they register this CILogon identity with the Fermilab sysadmins. You can find your CILogon identity by authenticating at the CILogon site and then selecting the tab “Certificate Information”. The information we need will be similar to:

Certificate Subject: /DC=org/DC=cilogon/C=US/O=University of Southern North Dakota at Hoople/OU=People/CN=P.D.Q. Bach/CN=UID:pdq

Open a Fermilab Service Desk request ticket using this link (services account password is needed). The short description should be “Add my CILogon identity to the LQCD gridmap file”; paste your CILogon certificate information into the request details. It can take one to two business days to set up your account.

External Lustre documentation

- Lustre User Guide

- Lustre Best Practices Guide from NASA

/project Filesystem

This limited storage area (just 5 TB) is typically used for approved projects. The filesystem is accessible from all cluster worker nodes via NFS as /project, using IP-over-InfiniBand, and is automatically backed up every night. It is suitable for output logs, meson correlators, and other small data files. It should NOT be used for storing fields, e.g. configurations or quark propagators.

Quotas in /project

Since the /project area is a ZFS filesystem, it is better suited than Lustre to a large number of small files. Storage quotas are set by the site managers. All Type A & B allocations get space in /project so that they can keep small files out of Lustre. Type A allocations typically get 100 GB. Type B allocations typically get 50 GB. All others get space if requested, typically 25 GB or less. We do not set a limit on the number of files under /project.

/pnfs/lqcd Filesystem

/pnfs/lqcd is the central disk cache with tape backup facility and is intended for permanent storage of parameter files and results. Although this appears to be a standard disk area, it is not. Commands to manipulate file and directory metadata (for example, rm, mv, chmod, mkdir, rmdir) will work here but commands like cp or cat will not. Instead, you will need to use dccp to copy files in and out of this area. dccp has syntax like cp, i.e.

dccp source destination
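For scripted transfers, the same `dccp source destination` form can be driven from Python. This is a minimal sketch: the helper names and the paths in the usage note are hypothetical, and it has to run on a node where /pnfs/lqcd is visible and `dccp` is installed.

```python
import subprocess
from typing import List


def dccp_command(source: str, destination: str) -> List[str]:
    """Build the argument list for `dccp source destination`."""
    return ["dccp", source, destination]


def dccp_copy(source: str, destination: str) -> None:
    """Run dccp and raise CalledProcessError if it exits with an error."""
    subprocess.run(dccp_command(source, destination), check=True)
```

For example, `dccp_copy("results.tar", "/pnfs/lqcd/myproject/results.tar")` would archive a hypothetical tarball, and swapping the two arguments would copy it back out.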

| Area | Description |
|---|---|
| /project | Area typically used for approved projects. Visible on all cluster worker nodes via NFS. Backups nightly. Suitable for output logs, meson correlators, and other small data files. NOT suitable for fields, e.g. configs or quark propagators. |
| /home | Home area. Backups nightly. Visible on all cluster worker nodes via NFS. Not suitable for configs or props. Can be used as a “run” directory for light production or testing. A quota of about 6 to 10 GB per home directory. Run zfsquota on lq.fnal.gov to check your home area disk usage. |
| /pnfs/lqcd | Enstore tape storage. Visible on cluster login head nodes only. Ideal for permanent storage of parameter files and results. Must use the special copy command ‘dccp’. |
| /lustre1 | Lustre storage. NO backups. Visible on all cluster worker nodes. Ideal for temporary storage (~month) of very large data files. NOT suitable for a large number of small files. Disk space usage is monitored and disk quotas are enforced. |
| /scratch | Local disk on each worker node. NO backups. 892 GB in size. Suitable for writing all sorts of data created in a batch job. Any precious data needs to be copied off of this area before a job ends, since the area is automatically purged at the end of a job. |
| Globus | Globus endpoint named lqcd#lq1, which you can use to transfer data in and out of LQ1. Please refer to the documentation on globus.org for setting up Globus Connect Personal if needed. |

/scratch Filesystem

Each worker node has a private locally mounted filesystem called /scratch which is available to a batch job. Each LQ1 worker has 892 GB of space on /scratch. Scratch is the recommended place to write data of all sorts created in a batch job. Any precious data needs to be copied off of /scratch before a job ends since the area is automatically purged at the end of a job.
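Because /scratch is purged when the job ends, a job script typically finishes by copying its outputs elsewhere. The sketch below shows one way to do that from Python; the function name, the directory names, and the `*.dat` pattern are hypothetical. It uses ordinary file copies, so the destination should be an area such as /lustre1 or /project rather than /pnfs/lqcd, which requires dccp.

```python
import shutil
from pathlib import Path


def save_results(scratch_dir, dest_dir, pattern="*"):
    """Copy regular files matching `pattern` from scratch_dir into dest_dir.

    Creates dest_dir if needed and returns the list of copied destination
    paths. Subdirectories of scratch_dir are not descended into.
    """
    scratch = Path(scratch_dir)
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    copied = []
    for src in sorted(scratch.glob(pattern)):
        if src.is_file():
            # copy2 preserves timestamps along with the file contents.
            copied.append(Path(shutil.copy2(src, dest / src.name)))
    return copied
```

Calling `save_results("/scratch/myjob", "/lustre1/myproject/run42", "*.dat")` as the last step of a batch job would keep the hypothetical `.dat` outputs after the purge.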