Important considerations about home directories in /nashome

- You must have ssh delegate (forward) your Kerberos ticket when you log in to the cluster; otherwise, your home directory in the /nashome filesystem will not be accessible.

- Do not launch batch jobs from /nashome. They will fail silently, without a trace, because batch jobs do not have a valid Kerberos ticket!

- Batch jobs should not depend on access to /nashome in any way, and neither should software intended for use in batch jobs. Many software packages, including Python, conda, pip, Julia, R, Spack, EasyBuild, and Apptainer, use (hidden) directories in $HOME by default. Use the shell environment variables specific to each package to place these directories in your work directory in the /work1 filesystem.
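As a rough sketch of the last point, the function below sets environment variables that these packages consult so that their per-user directories land under a work area instead of $HOME. The function name `redirect_tool_dirs`, the `WORK_BASE` variable, and the `/work1/myproject/<user>` layout are assumptions made for illustration; substitute your own project directory, and check each package's documentation for the settings that apply to the version you use.

```shell
# Hypothetical helper: point per-user tool directories at a work area.
# The argument is the base directory to use (the path layout is an assumption).
redirect_tool_dirs() {
    base="$1"
    export PYTHONUSERBASE="$base/python"               # target of "pip install --user"
    export PIP_CACHE_DIR="$base/cache/pip"             # pip download/wheel cache
    export CONDA_PKGS_DIRS="$base/conda/pkgs"          # conda package cache
    export CONDA_ENVS_PATH="$base/conda/envs"          # conda named environments
    export JULIA_DEPOT_PATH="$base/julia"              # Julia packages and registries
    export R_LIBS_USER="$base/R/library"               # R user library (create it before use)
    export SPACK_USER_CONFIG_PATH="$base/spack/config" # replaces ~/.spack configuration
    export SPACK_USER_CACHE_PATH="$base/spack/cache"   # replaces ~/.spack caches
    export EASYBUILD_PREFIX="$base/easybuild"          # EasyBuild install/build prefix
    export APPTAINER_CACHEDIR="$base/apptainer/cache"  # Apptainer image cache
}

# Example call; WORK_BASE and "myproject" are placeholders, not real site settings.
redirect_tool_dirs "${WORK_BASE:-/work1/myproject/${USER:-$(id -un)}}"
```

Placing a call like this in a file that your batch scripts source keeps interactive and batch sessions consistent. The function only sets variables and does not create any directories.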

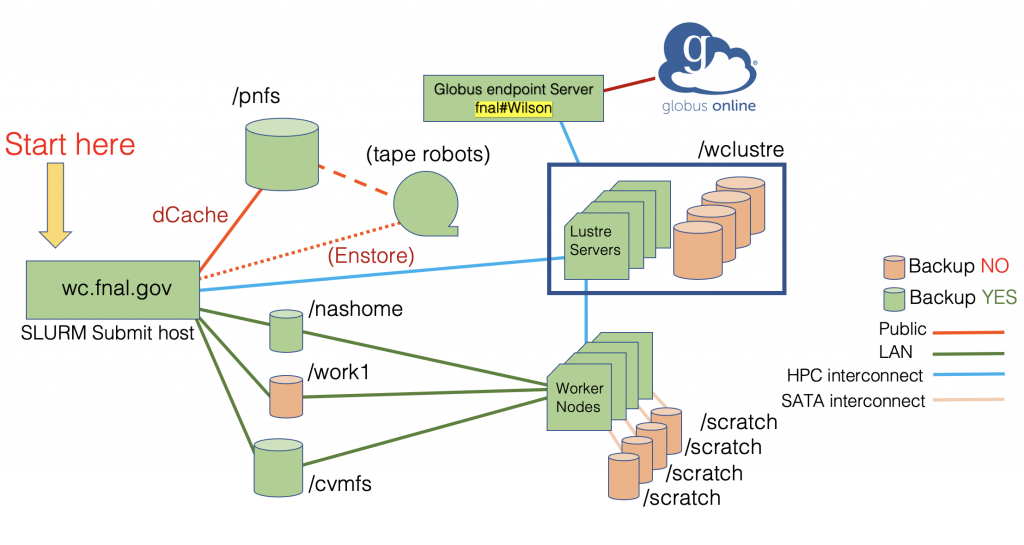

Filesystems available on the Wilson Cluster

The available filesystems are listed in the table below.

| Area | Description |
|---|---|
| /nashome | User home area. Quota limit per user = 5 GB. By default, every user with a valid account on WC-IC has a home directory under this area. Backup: YES |
| /work1 | Project data area. Permissions and quota managed by GID/group. Default quota limit per project = 25 GB. Projects may request additional space using the Project and User Requests forms. Backup: NO |
| /cvmfs | CernVM File System. Access to HEP software from WC-IC login hosts and worker nodes. |
| /wclustre | Lustre storage. Default quota limit per group = 100 GB. Visible on all cluster worker nodes. Ideal for temporary storage (~month) of very large data files. Because Lustre is optimized for large files, groups of small files should be packed into larger tar files. Disk space usage is monitored, and disk quotas enforced. One of the main factors leading to the high performance of Lustre™ file systems is the ability to stripe data over multiple OSTs. The stripe count can be set on a file system, directory, or file level. See the Lustre Striping Guide for more information. Backup: NO |
| /scratch | Local scratch disk on each worker node. 289 GB in size. Suitable for writing all sorts of data created in a batch job. Offers the best performance. Any precious data needs to be copied off of this area before a job ends since the area is automatically purged at the end of a job. Backup: NO |
| /pnfs/fermigrid | Fermigrid volatile dCache storage. Visible on cluster submit host only. Ideal for storage of parameter files and results. Backup: NO |
| Globus | Globus End-Point named fnal#wilson which you can use to transfer data in and out of WC-IC. Please refer to the documentation on globus.org on setting up a Globus Connect Personal if needed. |
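The table gives two practical pieces of advice: copy results off /scratch before a job ends, and pack groups of small files into tar files before storing them on Lustre. A minimal sketch combining the two is shown here. The function name `pack_results` and the example paths mentioned afterwards are hypothetical and are not part of the cluster setup.

```shell
# Hypothetical helper: pack a directory of small files into a single tar
# archive and write it to a destination directory on a shared filesystem.
pack_results() {
    src_dir="$1"     # directory holding job output, e.g. somewhere under /scratch
    dest_dir="$2"    # target directory, e.g. a group area under /wclustre
    name="$3"        # archive name without the .tar extension

    mkdir -p "$dest_dir" || return 1
    # -C makes the paths stored in the archive relative to src_dir.
    tar -cf "$dest_dir/$name.tar" -C "$src_dir" . || return 1
}
```

A job script could call it as its last step, for example `pack_results /scratch/myjob /wclustre/mygroup/results run01` (placeholder paths), or register the call with `trap '...' EXIT` so that it also runs when the script exits early.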

Accessing internet addresses from worker nodes

A web proxy is needed to access internet URLs from the worker nodes. Use cases include downloading software via git or using wget to fetch files. Set the environment variables below in your shell running on a worker node.

export https_proxy=http://squid.fnal.gov:3128

export http_proxy=http://squid.fnal.gov:3128

Other Documentation

- User documentation for Fermilab tape access tools is found in the Enstore/dCache User’s Guide.